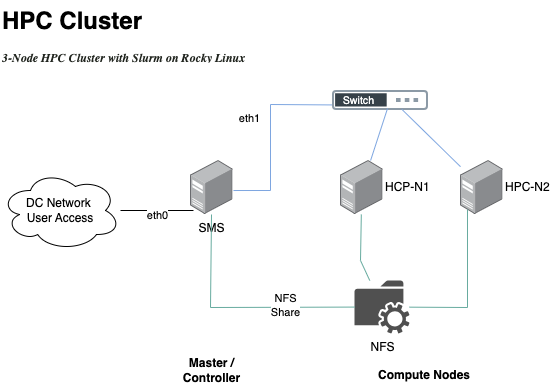

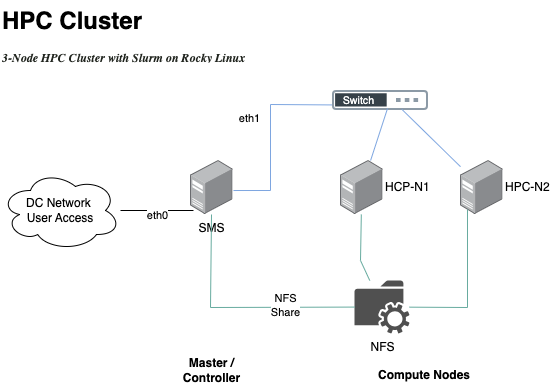

High-Performance Computing (HPC) clusters are essential for complex computations in scientific research, engineering, and data analysis. Setting up an HPC cluster may seem daunting, but with the right guidance, it becomes an achievable task even in a test lab environment. In this post, we’ll walk through setting up a 3-node HPC cluster using Rocky Linux and Slurm as the workload manager.

Table of Contents

- Introduction

- Prerequisites

- Step 1: Install Rocky Linux on All Nodes

- Step 2: Configure Network Settings

- Step 3: Configure SSH Key-Based Authentication

- Step 4: Install Necessary Software Packages

- Step 5: Configure NFS for Shared Storage

- Step 6: Configure Slurm Workload Manager

- Step 7: Test the HPC Cluster

- Troubleshooting Common Issues

- Conclusion

- Additional Resources

Introduction

This guide aims to help you set up a 3-node HPC cluster using Rocky Linux, an enterprise-level operating system that is binary-compatible with Red Hat Enterprise Linux. We’ll use Slurm (Simple Linux Utility for Resource Management) as our workload manager, which is widely adopted in HPC environments.

Prerequisites

Hardware Requirements

- Three Physical or Virtual Machines:
  - Head Node (SMS): Acts as the master node controlling the cluster.
  - Compute Nodes (HPC-N1, HPC-N2): Perform computational tasks.

- Network Equipment:
  - A network switch or router to connect all nodes.
  - Ethernet cables for connectivity.

- Minimum Specifications for Each Node:
  - CPU: 2 cores or more.
  - RAM: 4 GB or more.
  - Storage: 20 GB or more free disk space.

Software Requirements

- Operating System:
  - Rocky Linux 8 installed on all nodes.

- User Account:
  - A non-root user with `sudo` privileges on all nodes.

- Software Packages:
  - OpenSSH server and client.
  - Development tools (GCC, make, etc.).
  - MPI libraries (OpenMPI).
  - Slurm Workload Manager.
  - NFS utilities for shared storage.

Step 1: Install Rocky Linux on All Nodes

- Download Rocky Linux ISO:
  - Obtain the latest Rocky Linux 8 ISO from the official website.

- Install Rocky Linux:
  - Boot each machine from the ISO and follow the installation prompts.
  - Choose a minimal installation to reduce unnecessary packages.
  - Set the root password and create a non-root user with `sudo` privileges.

- Update the System:

```bash
sudo dnf update -y
```

Step 2: Configure Network Settings

Assign Static IP Addresses

- Identify Network Interfaces:

```bash
ip addr
```

  - Note the interface name (e.g., `ens33`, `eth0`).

- Configure Static IP on Each Node (edit the network configuration):

```bash
sudo nmcli con mod "System eth0" ipv4.addresses 192.168.1.X/24 ipv4.gateway 192.168.1.1 ipv4.dns "8.8.8.8" ipv4.method manual
```

  - Replace `eth0` in the connection name `System eth0` with your interface name; `nmcli con show` lists the exact connection names.
  - Replace `192.168.1.X` with the desired IP address for each node.

- Restart NetworkManager:

```bash
sudo systemctl restart NetworkManager
```

Set Hostnames

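- Set Hostnames on Each Node:
  - Head Node:

```bash
sudo hostnamectl set-hostname SMS
```

  - Compute Nodes (run the matching command on each node):

```bash
sudo hostnamectl set-hostname HPC-N1   # on the first compute node
sudo hostnamectl set-hostname HPC-N2   # on the second compute node
```

- Verify Hostnames:

```bash
hostname
```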

Update /etc/hosts File

- Edit `/etc/hosts` on All Nodes:

```bash
sudo nano /etc/hosts
```

  - Add the following entries (adjust IPs accordingly):

```
192.168.1.100 SMS
192.168.1.101 HPC-N1
192.168.1.102 HPC-N2
```

- Ensure Consistency:
  - The `/etc/hosts` file should be identical on all nodes.
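Because `/etc/hosts` has to match on every node, it can help to script the edit. The sketch below is a minimal example and not part of the original setup: the function `add_host_entries` and the `HOSTS_FILE` override are hypothetical names, and the entries are assumed to follow the IP plan above. It appends an entry only when that hostname is not already listed, so running it twice is harmless.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" entries to a hosts file,
# skipping any hostname that is already listed. HOSTS_FILE defaults to
# /etc/hosts, so writing the real file requires a root shell.
add_host_entries() {
    local hosts_file="${HOSTS_FILE:-/etc/hosts}"
    local entry ip name
    for entry in "$@"; do
        ip="${entry%% *}"    # text before the first space
        name="${entry#* }"   # text after the first space
        # Match the hostname as a whole field on a non-comment line.
        if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
            echo "skip:  ${name} already present"
        else
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
            echo "added: ${ip} ${name}"
        fi
    done
}
```

For example, from a root shell (`sudo -i`) on each node: `add_host_entries "192.168.1.100 SMS" "192.168.1.101 HPC-N1" "192.168.1.102 HPC-N2"`. Prefixing the call with `HOSTS_FILE=/tmp/hosts.test` lets you try it on a scratch file first.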

Step 3: Configure SSH Key-Based Authentication

- Generate SSH Key on the Head Node:

```bash
ssh-keygen -t rsa -b 4096
```

  - Press Enter through the prompts to accept the defaults and set no passphrase.

- Copy SSH Key to Compute Nodes:

```bash
ssh-copy-id HPC-N1
ssh-copy-id HPC-N2
```

  - Enter the user's password when prompted.

- Test SSH Access:

```bash
ssh HPC-N1 exit
ssh HPC-N2 exit
```

  - You should be able to log in without a password.
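A password prompt during the interactive test above is easy to overlook, so a non-interactive check can be useful. A minimal sketch: `check_ssh` is a hypothetical helper, while `BatchMode` (fail instead of prompting) and `ConnectTimeout` are standard OpenSSH client options.

```bash
#!/usr/bin/env bash
# Hypothetical helper: confirm key-based SSH works for each host without
# ever falling back to a password prompt. Returns non-zero if any host fails.
check_ssh() {
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of asking for a password;
        # ConnectTimeout stops an unreachable node from hanging the loop.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "ok:   ${host}"
        else
            echo "FAIL: ${host}"
            failed=1
        fi
    done
    return "$failed"
}
```

Running `check_ssh HPC-N1 HPC-N2` on the head node prints one line per node; any `FAIL` line means that node still needs `ssh-copy-id` or a network fix.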

Step 4: Install Necessary Software Packages

Install Development Tools

On All Nodes:

```bash
sudo dnf groupinstall "Development Tools" -y
sudo dnf install epel-release -y
sudo dnf install nano wget curl git -y
```

Install MPI Libraries

Install OpenMPI on All Nodes:

```bash
sudo dnf install openmpi openmpi-devel -y
```

Set Environment Variables:

Add to `.bashrc`:

```bash
echo 'export PATH=$PATH:/usr/lib64/openmpi/bin' >> ~/.bashrc
echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/lib64/openmpi/lib' >> ~/.bashrc
```

Reload `.bashrc`:

```bash
source ~/.bashrc
```
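Running the two `echo ... >> ~/.bashrc` commands a second time appends duplicate lines. A minimal idempotent variant is sketched below; `append_once` and `add_openmpi_env` are hypothetical helper names, and the paths are the OpenMPI locations used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is
# not already present, so re-running the setup does not duplicate it.
append_once() {
    local line="$1" file="$2"
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Hypothetical wrapper for the two OpenMPI exports from the step above.
# Single quotes keep $PATH and $LD_LIBRARY_PATH unexpanded until login.
add_openmpi_env() {
    local rc="${1:-$HOME/.bashrc}"
    append_once 'export PATH=$PATH:/usr/lib64/openmpi/bin' "$rc"
    append_once 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/lib64/openmpi/lib' "$rc"
}
```

Call `add_openmpi_env` (it defaults to `~/.bashrc`), then `source ~/.bashrc`. Once `/home` is shared over NFS in Step 5, the same `~/.bashrc` is seen on every node.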

Install Slurm Workload Manager

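- Add Slurm Repository:

```bash
sudo dnf install https://download.schedmd.com/slurm/slurm-20.11.9-1.el8.x86_64.rpm -y
```

  - Replace the URL with the latest Slurm RPM for Rocky Linux 8. The `slurm`, `slurm-slurmctld`, and `slurm-slurmd` packages used below are also available from the EPEL repository installed earlier.

- Install Slurm Packages:
  - On Head Node:

```bash
sudo dnf install slurm slurm-slurmctld -y
```

  - On Compute Nodes:

```bash
sudo dnf install slurm slurm-slurmd -y
```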

Step 5: Configure NFS for Shared Storage

Sharing directories like /home via NFS allows users and applications to access the same files across all nodes.

Set Up NFS Server on the Head Node

- Install NFS Utilities:

```bash
sudo dnf install nfs-utils -y
```

- Configure Exports:

```bash
sudo nano /etc/exports
```

  - Add the following line (`*` lets any host mount the export; outside a test lab, restrict it to the cluster subnet, e.g. `192.168.1.0/24`):

```
/home *(rw,sync,no_root_squash,no_subtree_check)
```

- Start NFS Services:

```bash
sudo systemctl enable --now nfs-server rpcbind
```

- Export File Systems:

```bash
sudo exportfs -a
```

Mount NFS Shares on Compute Nodes

- Install NFS Utilities:

```bash
sudo dnf install nfs-utils -y
```

- Create Mount Points:

```bash
sudo mkdir -p /home
```

- Edit `/etc/fstab`:

```bash
sudo nano /etc/fstab
```

  - Add the following line:

```
SMS:/home /home nfs defaults 0 0
```

- Mount NFS Shares:

```bash
sudo mount -a
```

- Verify Mounts:

```bash
df -h
```
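`df -h` lists every file system; to confirm specifically that `/home` on a compute node comes from the head node, you can read the kernel mount table. A minimal sketch, assuming the standard `/proc/mounts` layout (device, mount point, type, ...); `is_nfs_mount` is a hypothetical helper. It accepts any type starting with `nfs`, because an NFSv4 mount is usually listed as `nfs4` even when `/etc/fstab` says `nfs`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed (and print the source) if the given mount
# point is backed by NFS according to the mount table.
is_nfs_mount() {
    local mountpoint="$1" mounts="${2:-/proc/mounts}"
    awk -v mp="$mountpoint" '
        # Field 2 is the mount point, field 3 the file system type.
        $2 == mp && $3 ~ /^nfs/ { print $1 " on " $2 " (" $3 ")"; found = 1 }
        END { exit (found ? 0 : 1) }
    ' "$mounts"
}
```

On each compute node, `is_nfs_mount /home` should print something like `SMS:/home on /home (nfs4)` and exit 0; a non-zero exit means `/home` is still the local directory.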

Step 6: Configure Slurm Workload Manager

Configure MUNGE Authentication

MUNGE (MUNGE Uid ‘N’ Gid Emporium) is an authentication service for creating and validating credentials.

- Install MUNGE on All Nodes:

```bash
sudo dnf --enablerepo=devel install munge-devel
sudo dnf install munge munge-libs munge-devel -y
```

- Generate MUNGE Key on Head Node:

```bash
sudo /usr/sbin/create-munge-key
```

- Copy MUNGE Key to Compute Nodes:

```bash
sudo scp /etc/munge/munge.key HPC-N1:/etc/munge/
sudo scp /etc/munge/munge.key HPC-N2:/etc/munge/
```

  - Because these commands run under `sudo`, `scp` connects as root on the compute nodes and uses root's SSH credentials, not the key set up in Step 3.

- Set Permissions on All Nodes:

```bash
sudo chown munge: /etc/munge/munge.key
sudo chmod 400 /etc/munge/munge.key
```

- Start MUNGE Service on All Nodes:

```bash
sudo systemctl enable --now munge
```

- Test MUNGE:

```bash
munge -n | unmunge | grep STATUS
```

  - Output should be `STATUS: Success (0)`.
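A wrong owner or mode on the copied key is a common reason for MUNGE trouble on the compute nodes. A minimal sketch that compares the key's owner and mode with the values set above (`munge`, `400`); `check_key_perms` is a hypothetical helper and relies on GNU `stat -c`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify a MUNGE key has the expected owner and mode.
check_key_perms() {
    local key="${1:-/etc/munge/munge.key}" owner="${2:-munge}"
    local actual
    # GNU stat: %U = owner name, %a = octal permission bits.
    actual=$(stat -c '%U %a' "$key" 2>/dev/null) || { echo "missing: ${key}"; return 1; }
    if [ "$actual" = "${owner} 400" ]; then
        echo "ok: ${key} (${actual})"
    else
        echo "wrong: ${key} is '${actual}', expected '${owner} 400'"
        return 1
    fi
}
```

Since `/etc/munge` is normally accessible only to the munge user, run the check as root on each node, for example `sudo bash -c "$(declare -f check_key_perms); check_key_perms"`.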

Configure Slurm on the Head Node

- Create Slurm User and Directories:

```bash
sudo useradd slurm
sudo mkdir -p /var/spool/slurmctld
sudo chown slurm: /var/spool/slurmctld
sudo touch /var/log/slurmctld.log
sudo chown slurm: /var/log/slurmctld.log
```

- Edit Slurm Configuration File:

```bash
sudo nano /etc/slurm/slurm.conf
```

  - Basic `slurm.conf` example (edit as required; recent Slurm releases deprecate `ControlMachine` in favor of `SlurmctldHost` and `select/cons_res` in favor of `select/cons_tres`):

```
ClusterName=mycluster
ControlMachine=SMS
MpiDefault=none
ProctrackType=proctrack/pgid
ReturnToService=2
SlurmctldPidFile=/var/run/slurmctld.pid
SlurmctldPort=6817
SlurmdPidFile=/var/run/slurmd.pid
SlurmdPort=6818
SlurmdSpoolDir=/var/spool/slurmd
SlurmUser=slurm
StateSaveLocation=/var/spool/slurmctld
SwitchType=switch/none
TaskPlugin=task/none
SchedulerType=sched/backfill
SelectType=select/cons_res
SelectTypeParameters=CR_Core_Memory
SlurmctldDebug=info
SlurmctldLogFile=/var/log/slurmctld.log
SlurmdDebug=info
SlurmdLogFile=/var/log/slurmd.log
NodeName=HPC-N[1-2] CPUs=2 RealMemory=2000 State=UNKNOWN
PartitionName=debug Nodes=HPC-N[1-2] Default=YES MaxTime=INFINITE State=UP
```

  - Set `MailProg`:

```
MailProg=/bin/true
```

- Save and exit the file.
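The `NodeName` line should not claim more CPUs or memory than a node really has; a node that registers with less memory than its configured `RealMemory` is typically drained by the controller. Slurm can print a node's detected hardware itself with `slurmd -C`; the sketch below shows the same idea for the two values used here. `node_line` is a hypothetical helper that converts `MemTotal` (reported in kB in `/proc/meminfo`) to the megabytes `RealMemory` expects, and uses `nproc` unless a CPU count is given.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a slurm.conf NodeName line for this node from
# its CPU count and MemTotal (kB in /proc/meminfo, converted to MB).
node_line() {
    local name="$1" cpus="${2:-$(nproc)}" meminfo="${3:-/proc/meminfo}"
    local mem_mb
    mem_mb=$(awk '/^MemTotal:/ { print int($2 / 1024) }' "$meminfo")
    echo "NodeName=${name} CPUs=${cpus} RealMemory=${mem_mb} State=UNKNOWN"
}
```

Run `node_line HPC-N1` on that compute node and compare the result with `slurm.conf`. The example's `RealMemory=2000` sits well below the 4 GB in the prerequisites, which leaves headroom for the operating system; and because a single `NodeName=HPC-N[1-2]` line covers both nodes, they are assumed to be identical.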

Distribute Slurm Configuration to Compute Nodes

- Create Slurm User and Directories on Compute Nodes:

```bash
sudo useradd slurm
sudo mkdir -p /var/spool/slurmd
sudo chown slurm: /var/spool/slurmd
sudo touch /var/log/slurmd.log
sudo chown slurm: /var/log/slurmd.log
```

- Copy `slurm.conf` to Compute Nodes:

```bash
sudo scp /etc/slurm/slurm.conf HPC-N1:/etc/slurm/
sudo scp /etc/slurm/slurm.conf HPC-N2:/etc/slurm/
```
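Slurm expects every node to use the same `slurm.conf`, and the copies drift easily after later edits on the head node. A minimal sketch for comparing a compute node's copy with the head node's; `same_as_stdin` is a hypothetical helper that simply wraps `cmp`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare a reference file with content read from
# stdin (for example, the same file streamed from a compute node over SSH).
same_as_stdin() {
    local reference="$1"
    # cmp reads "-" as standard input; -s suppresses output.
    if cmp -s - "$reference"; then
        echo "identical: ${reference}"
    else
        echo "DIFFERS:   ${reference}"
        return 1
    fi
}
```

On the head node, `ssh HPC-N1 cat /etc/slurm/slurm.conf | same_as_stdin /etc/slurm/slurm.conf` reports whether HPC-N1 has an up-to-date copy.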

Start Slurm Services

- Start Slurm Controller on Head Node:

```bash
sudo systemctl enable --now slurmctld
```

- Start Slurm Daemon on Compute Nodes:

```bash
sudo systemctl enable --now slurmd
```

- Verify Slurm Services:
  - On Head Node:

```bash
sinfo
```

  - This should display the nodes and their states.
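Once `slurmd` has registered, both compute nodes should normally show as `idle`. To check that without reading the table by eye, the sketch below parses one "node state" pair per line, as printed by `sinfo -N -h -o "%N %t"` (one line per node, no header, compact state). `check_node_states` is hypothetical, and the set of states it treats as healthy (`idle`, `mix`, `alloc`, `comp`) is an assumption; anything else, including a trailing `*` (node not responding), `down`, or `drain`, is reported as a warning.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "node state" pairs on stdin and flag nodes
# whose compact sinfo state is not one of the assumed healthy states.
check_node_states() {
    local node state bad=0
    while read -r node state; do
        [ -z "$node" ] && continue
        case "$state" in
            idle|mix|alloc|comp) echo "ok:   ${node} (${state})" ;;
            *) echo "WARN: ${node} (${state})"; bad=1 ;;
        esac
    done
    return "$bad"
}
```

Usage on the head node: `sinfo -N -h -o "%N %t" | check_node_states`. For a node reported as drained or down, `scontrol show node HPC-N1` shows the recorded `Reason`.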

Step 7: Test the HPC Cluster

Compile a Test MPI Program

- Create an MPI “Hello World” Program (work in your home directory, which is shared over NFS, so the compute nodes can find the compiled binary):

```bash
nano mpi_hello.c
```

  - `mpi_hello.c`:

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char** argv) {
    // Initialize the MPI environment (NULL arguments are permitted).
    MPI_Init(NULL, NULL);

    // Total number of processes in the job.
    int world_size;
    MPI_Comm_size(MPI_COMM_WORLD, &world_size);

    // Rank (ID) of this process.
    int world_rank;
    MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

    // Name of the host this process runs on.
    char processor_name[MPI_MAX_PROCESSOR_NAME];
    int name_len;
    MPI_Get_processor_name(processor_name, &name_len);

    printf("Hello world from processor %s, rank %d out of %d processors\n",
           processor_name, world_rank, world_size);

    // Release MPI resources before exiting.
    MPI_Finalize();
    return 0;
}
```

- Compile the Program:

```bash
mpicc mpi_hello.c -o mpi_hello
```

- Create a Slurm Job Script:

```bash
nano mpi_job.sh
```

  - `mpi_job.sh`:

```bash
#!/bin/bash
#SBATCH --job-name=mpi_test
#SBATCH --output=mpi_test.out
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=2
#SBATCH --time=00:05:00

srun ./mpi_hello
```

- Submit the Job:

```bash
sbatch mpi_job.sh
```

- Monitor the Job:

```bash
squeue
```

- Check the Output:

```bash
cat mpi_test.out
```

  - You should see one line from each rank, coming from processes on both compute nodes.
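With `--nodes=2` and `--ntasks-per-node=2`, the job should print four lines, for ranks 0 to 3 spread over both compute nodes, in no particular order. A minimal sketch that summarizes `mpi_test.out` by counting distinct ranks and hosts; `summarize_mpi_output` is hypothetical and assumes the exact `printf` format used in `mpi_hello.c`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarize lines of the form
#   "Hello world from processor <host>, rank <r> out of <n> processors"
summarize_mpi_output() {
    local file="${1:-mpi_test.out}"
    awk '
        /^Hello world from processor / {
            host = $5; sub(/,$/, "", host)   # field 5 is "<host>,"
            hosts[host] = 1
            ranks[$7] = 1                    # field 7 is the rank
            size = $10                       # field 10 is the world size
        }
        END {
            nh = 0; for (h in hosts) nh++
            nr = 0; for (r in ranks) nr++
            printf "ranks=%d size=%d hosts=%d\n", nr, size, nh
        }
    ' "$file"
}
```

For a working run, `summarize_mpi_output mpi_test.out` should print `ranks=4 size=4 hosts=2`. If instead every line reports `rank 0 out of 1`, each task started as its own single-process MPI job, which usually points to a mismatch between the MPI library and the MPI plugin `srun` uses (`srun --mpi=list` shows the available plugins).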

Troubleshooting Common Issues

Issue 1: Unable to Contact Slurm Controller

Error Message:

```
slurm_load_partitions: Unable to contact slurm controller (connect failure)
```

Solution:

- Ensure `slurmctld` is running on the head node:

```bash
sudo systemctl status slurmctld
```

- Check `slurm.conf` for the correct `ControlMachine`:
  - Ensure it matches the head node’s hostname.

- Verify MUNGE is running on all nodes:

```bash
sudo systemctl status munge
```

- Ensure firewalls are not blocking the Slurm ports (6817, 6818):

```bash
sudo firewall-cmd --permanent --add-port=6817-6818/tcp
sudo firewall-cmd --reload
```
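In the `slurm.conf` above, the controller listens on `SlurmctldPort` (6817) on the head node and each `slurmd` on `SlurmdPort` (6818), so it helps to test those ports from the other end of the connection. A minimal sketch using bash's built-in `/dev/tcp` redirection and coreutils `timeout`; `port_open` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to host:port can be
# opened within 3 seconds, using bash's /dev/tcp (no nc or telnet needed).
port_open() {
    local host="$1" port="$2"
    timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

From a compute node, `port_open SMS 6817 && echo reachable` tests the controller; from the head node, `port_open HPC-N1 6818` tests a compute node's `slurmd`. A closed port on a compute node usually means the firewall rule above also has to be added there.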

Issue 2: Configured MailProg is Invalid

Error Message:

```
error: Configured MailProg is invalid
```

Solution:

- Install the `mailx` package:

```bash
sudo dnf install mailx -y
```

- Update `slurm.conf`:
  - Set `MailProg` to the correct path, or disable it:

```
MailProg=/bin/true
```

- Restart `slurmctld`:

```bash
sudo systemctl restart slurmctld
```
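The error suggests slurmctld found no executable at the configured `MailProg` path. A minimal sketch that reads `MailProg` from `slurm.conf` and checks that path; `check_mailprog` is hypothetical, and it matches the key case-insensitively because slurm.conf parameter names are not case-sensitive. It returns 0 for a valid path, 1 for an invalid one, and 2 when `MailProg` is not set in the file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the MailProg setting and check that it is an
# executable file. Exit codes: 0 ok, 1 invalid path, 2 not set.
check_mailprog() {
    local conf="${1:-/etc/slurm/slurm.conf}" prog
    prog=$(awk -F= '
        tolower($1) ~ /^[ \t]*mailprog[ \t]*$/ {
            v = $2
            sub(/#.*/, "", v)      # drop a trailing comment
            gsub(/[ \t]/, "", v)   # drop surrounding blanks
        }
        END { print v }
    ' "$conf")
    if [ -z "$prog" ]; then
        echo "MailProg is not set in ${conf}"
        return 2
    elif [ -f "$prog" ] && [ -x "$prog" ]; then
        echo "ok: MailProg=${prog}"
    else
        echo "invalid: MailProg=${prog} is not an executable file"
        return 1
    fi
}
```

Run `check_mailprog` on the head node before restarting `slurmctld`.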

Issue 3: Cluster Name Mismatch

Error Message:

```
fatal: CLUSTER NAME MISMATCH.
```

Solution:

- Stop `slurmctld`:

```bash
sudo systemctl stop slurmctld
```

- Remove old state files (this discards the saved job and node state):

```bash
sudo rm -rf /var/spool/slurmctld/*
```

- Ensure `ClusterName` is consistent in `slurm.conf`:

```
ClusterName=mycluster
```

- Restart `slurmctld`:

```bash
sudo systemctl start slurmctld
```
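Before deleting the state directory, it can help to see which two names disagree. A minimal diagnostic sketch, assuming your Slurm version records the name in a plain-text file called `clustername` inside `StateSaveLocation` (check that directory first; if there is no such file, this check does not apply). `check_cluster_name` is hypothetical, and it compares case-insensitively as a precaution.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare ClusterName in slurm.conf with the name
# assumed to be saved in <StateSaveLocation>/clustername.
check_cluster_name() {
    local conf="${1:-/etc/slurm/slurm.conf}"
    local state_file="${2:-/var/spool/slurmctld}/clustername"
    local configured saved
    # ClusterName value from slurm.conf (key matched case-insensitively).
    configured=$(awk -F= 'tolower($1) ~ /^[ \t]*clustername[ \t]*$/ { v = $2; gsub(/[ \t]/, "", v) } END { print v }' "$conf")
    if [ ! -f "$state_file" ]; then
        echo "no saved name in ${state_file}; nothing to compare"
        return 0
    fi
    saved=$(tr -d '[:space:]' < "$state_file")
    # Lowercase both sides before comparing.
    if [ "$(echo "$configured" | tr '[:upper:]' '[:lower:]')" = "$(echo "$saved" | tr '[:upper:]' '[:lower:]')" ]; then
        echo "match: ${configured}"
    else
        echo "MISMATCH: slurm.conf has '${configured}', state file has '${saved}'"
        return 1
    fi
}
```

Run it as root on the head node (`StateSaveLocation` is owned by the slurm user); a `MISMATCH` line shows both names, which tells you whether `slurm.conf` or the old state is the one to change.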

Conclusion

Setting up an HPC cluster with Slurm on Rocky Linux involves several steps, from configuring network settings to installing and configuring essential services like MUNGE and Slurm. By carefully following this guide and addressing common issues, you can build a functional HPC test lab that provides a platform for exploring parallel computing and cluster management.

Additional Resources

- Rocky Linux Documentation: https://docs.rockylinux.org/

- Slurm Official Documentation: https://slurm.schedmd.com/documentation.html

- OpenMPI Official Documentation: https://www.open-mpi.org/doc/

- MUNGE Documentation: https://dun.github.io/munge/

- HPC Admin Tutorials: https://www.hpcadvisorycouncil.com/

Note: Always ensure your systems are secure by following best practices, such as keeping software up to date, using strong passwords, and limiting access to authorized users.

Happy Computing!

Comments

5 responses to “HPC Cluster”

Image credit: Affan Javid

What if I update my Rocky Linux to version 9.x? Is there anything important that I need to take note of?

It's good to check which packages the update requires and study its impact. All the packages are installed using dnf, and things go smoothly for me most of the time. For a bad day, keep backups. Good luck!

Did you encounter a situation where the output content was not generated? If so, what are the possible causes and are there any recommended solutions? Thanks in advance.

Most of the time it's a script error; other things to check are NFS and the network connections between nodes.

If the upgrade is really required, check the compatibility of the libraries on the new OS and proceed as you would when upgrading any production RHEL or Rocky Linux system. I have not tested that upgrade so far.